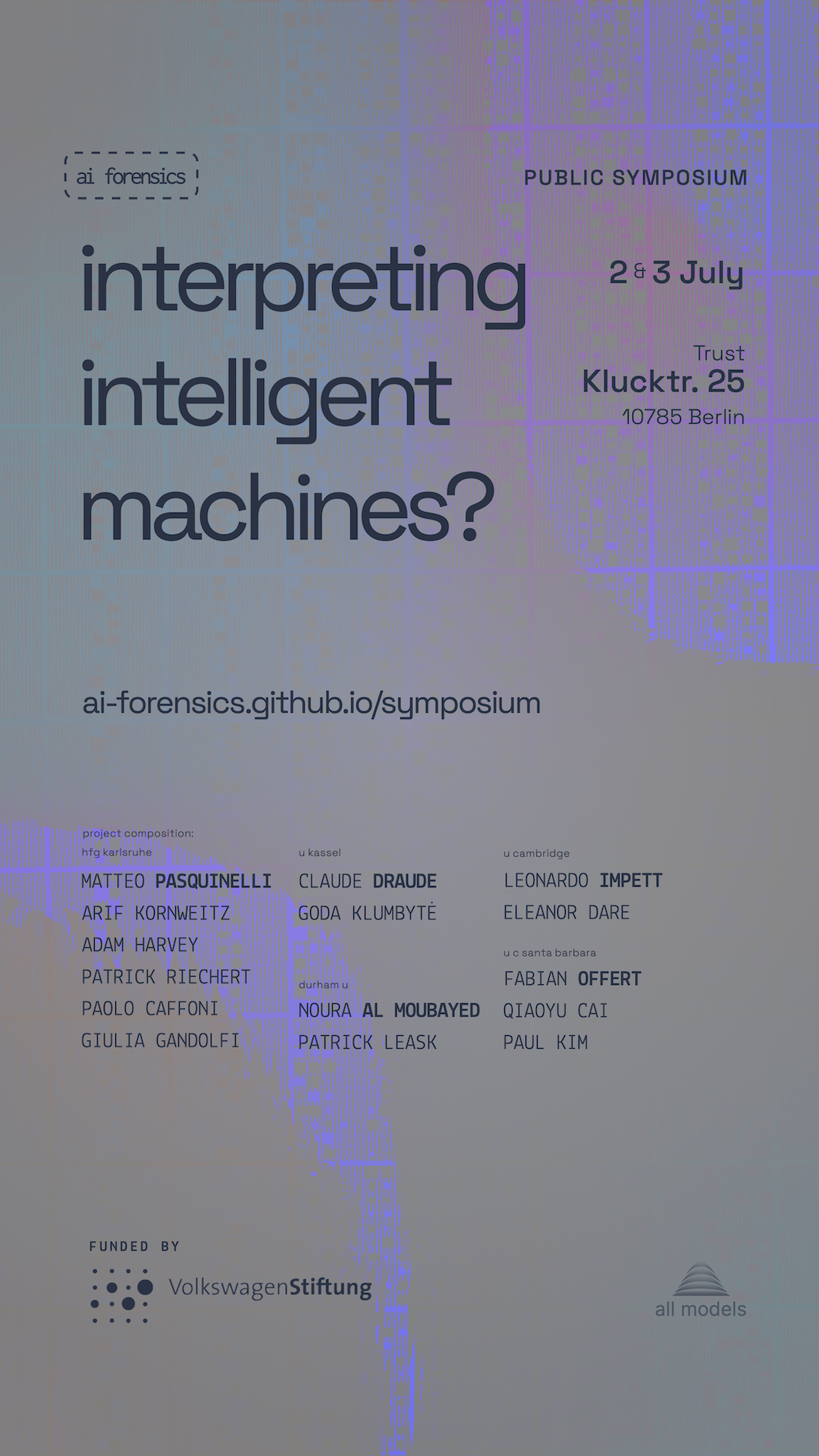

On 2 and 3 July, join the AI Forensics team for a two-day public symposium:

Interpreting intelligent machines?

What does it mean to interpret machines? What is language to a computer? What is the epistemology determining artificial intelligence? Is there an act of interpretation common to traditional hermeneutics and the nascent attempts to reverse engineer neural algorithms? According to which historical trajectories do social relations, processes of abstraction, definitions of intelligence, and predictive models configure one another? What epistemic horizons thereby impose themselves on us, and what modes of knowing could we mobilise in renewal?

Logistical information

The symposium will take place over two days, Wed., 2 July and Thu., 3 July, at Trust in Berlin. There will be a mix of lectures, panel discussions, and more participatory, workshop-esque formats.

- The symposium will be livestreamed.

- The link is here (opens Zoom).

- Please note: while it will of course be possible to ask questions or speak via stream, actively participating in the more open formats may be difficult.

- Selected recordings will also be made available after the fact.

- Changes to the schedule are possible! The programme on this page will be kept up to date throughout.

- You can request reminders and updates by email through this form.

- Add the symposium to your calendar: Day 1, Day 2

Interpreting intelligent machines? pt. 1

Trust, Kluckstraße 25, 10785 Berlin

project retrospective / transformers: models of? / cartography / labour / knowledges and practices of interpretability

- Opening

Arrival

10:00

Introduction

10:20 Talk

What is AI Forensics? A retrospective introduction

Lead PI Matteo Pasquinelli (HfG Karlsruhe/U Venice) opens the symposium: Why forensics?—The search for a methodology commensurate to the societal transformations wrought by AI. Exploding (the view of the) AI production pipeline. Characterising the project.

Discussion

Transformer hegemony

11:15 Panel

Revisiting milestones in the transformers’ ascent, these contributions offer critical reappraisals that complicate the eponymous catchphrase of attention being all you need. They put forth pieces of an ‘alternative chronology’ of these models.

Machine translation

seq2seq, NLLB

Paolo Caffoni (HfG Karlsruhe) investigates tokenization as a metric of labour.

Visual culture

CLIP

Leonardo Impett (U Cambridge) looks into OpenAI’s multimodal CLIP embedding model of visual culture.

Discussion

Cartography—language models, labour, and the social

12:20 Roundtable

Cartography as methodology

Goda Klumbytė (U Kassel) introduces cartography as a method.

Intelligence, social relations, general intellect?

Matteo Pasquinelli (HfG Karlsruhe/U Venice): from the Maschinenfragment via Postoperaismo to the internet-scale compression task that is LLM training…

Discussion + collaborative mapping

- Break

Lunch

13:15

Vector epistemology

15:00 Panel

The techniques and mechanisms underlying the shape of AI, and their form(s) of interpretation.

Vector media. Towards a materialist epistemology of AI?

Presenting work forthcoming (2025) from meson press by Leonardo Impett (U Cambridge/B Hertziana) and Fabian Offert (UCSB)

Mechanistic interpretability with sparse autoencoders

Patrick Leask (Durham U) contextualises the SAE approach within the wider frame of mechanistic interpretability, also discussing the significance of recent findings (Leask et al., 2025)

Discussion

What kind of knowledge and practice is interpreting AI?

- Break

Informal

17:00

Interpreting intelligent machines? pt. 2

Trust, Kluckstraße 25, 10785 Berlin

historical epistemology / statistics / pedagogy / arts

Statistical normativities

11:00 Panel

Calculating health

Giulia Gandolfi (HfG Karlsruhe) on the historical epistemology of correlational knowledge in medicine

AI interpretability and accountability in the humanitarian sector

Arif Kornweitz (HfG Karlsruhe) presents his PhD project—from the inception of prediction in political philosophy to the “hard problem of conflict prediction”

Discussion

- Break

Lunch

13:15

Learning otherwise

15:00 Panel

Worlding beyond benchmarking

Eleanor Dare (U Cambridge) discusses worlding and arts-based research in the context of a series of pedagogical workshops on AI held in Cambridge, England

Tangibility and embodiment beyond the rational

Goda Klumbytė (U Kassel) on the need for new modes of knowing

Discussion

Adversariality

16:00 Discussion

Concepts beyond explainability

Discussing adversarial techniques, including with Goda Klumbytė (U Kassel) speaking about the Beyond Explainability workshop

Announcements: Outputs (forthcoming)

16:45

Two books, a workshop, and an anthology of boundary concepts: what the remainder of the project has in store

_predictions_

Interpretability interfaces

17:00 Workshop

Tutorials, followed by guided or freeform exploration with AI interpretability interfaces